
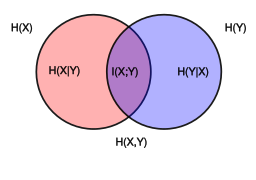

In information theory, joint entropy is a measure of the uncertainty associated with a set of random variables.[2]
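As an illustration, the joint Shannon entropy of two discrete random variables is conventionally written H(X, Y) = −Σ p(x, y) log₂ p(x, y), measured in bits. The following is a minimal sketch of that computation, assuming the joint distribution is supplied as a dictionary mapping outcome pairs to probabilities; the names `joint_entropy` and `fair_coins` are hypothetical and chosen only for this example.

```python
import math


def joint_entropy(joint_pmf):
    """Return H(X, Y) in bits for a joint pmf given as {(x, y): probability}.

    Hypothetical helper for illustration; zero-probability outcomes are
    skipped, following the usual convention that 0 * log(0) = 0.
    """
    total = sum(joint_pmf.values())
    if not math.isclose(total, 1.0):
        raise ValueError("probabilities must sum to 1")
    return -sum(p * math.log2(p) for p in joint_pmf.values() if p > 0)


# Assumed example: two independent fair coins, four equally likely outcomes.
fair_coins = {(x, y): 0.25 for x in "HT" for y in "HT"}
print(joint_entropy(fair_coins))  # 2.0
```

Two independent fair coins carry one bit each, so the pair carries two bits. If the two coins always showed the same face, the joint distribution would have only two equally likely outcomes and the same function would return one bit.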

- ^ D.J.C. MacKay (2003). Information Theory, Inference, and Learning Algorithms. Bibcode:2003itil.book.....M. p. 141.

- ^ Theresa M. Korn; Granino Arthur Korn (January 2000). Mathematical Handbook for Scientists and Engineers: Definitions, Theorems, and Formulas for Reference and Review. New York: Dover Publications. ISBN 0-486-41147-8.